AI in healthcare

Subtitle: The secret to unlocking AI’s potential in healthcare

By Olivia Geen, MD, MSc, FRCPC


AI in healthcare isn’t a new concept. It’s been in the news for a while. People have been working on it for decades, and with the recent release of OpenAI’s ChatGPT, AI arrived front and centre in the homes of many non-AI aficionados, prompting a resurgence of the question: can AI fix healthcare?

There have been some success stories. AI is now used routinely and with great accuracy in reading ECGs and certain imaging modalities like CTs and MRIs. There are exciting new platforms that are poised to reduce documentation burden (like Osler and Abridge).

“Artificial Intelligence (AI) seeks to make computers do the sorts of things that minds can do”

There are also failures - Babylon in the UK has received some backlash for its family-doctor AI algorithm, GP at Hand, which is said to sometimes diagnose inaccurately or ignore red-flag symptoms.

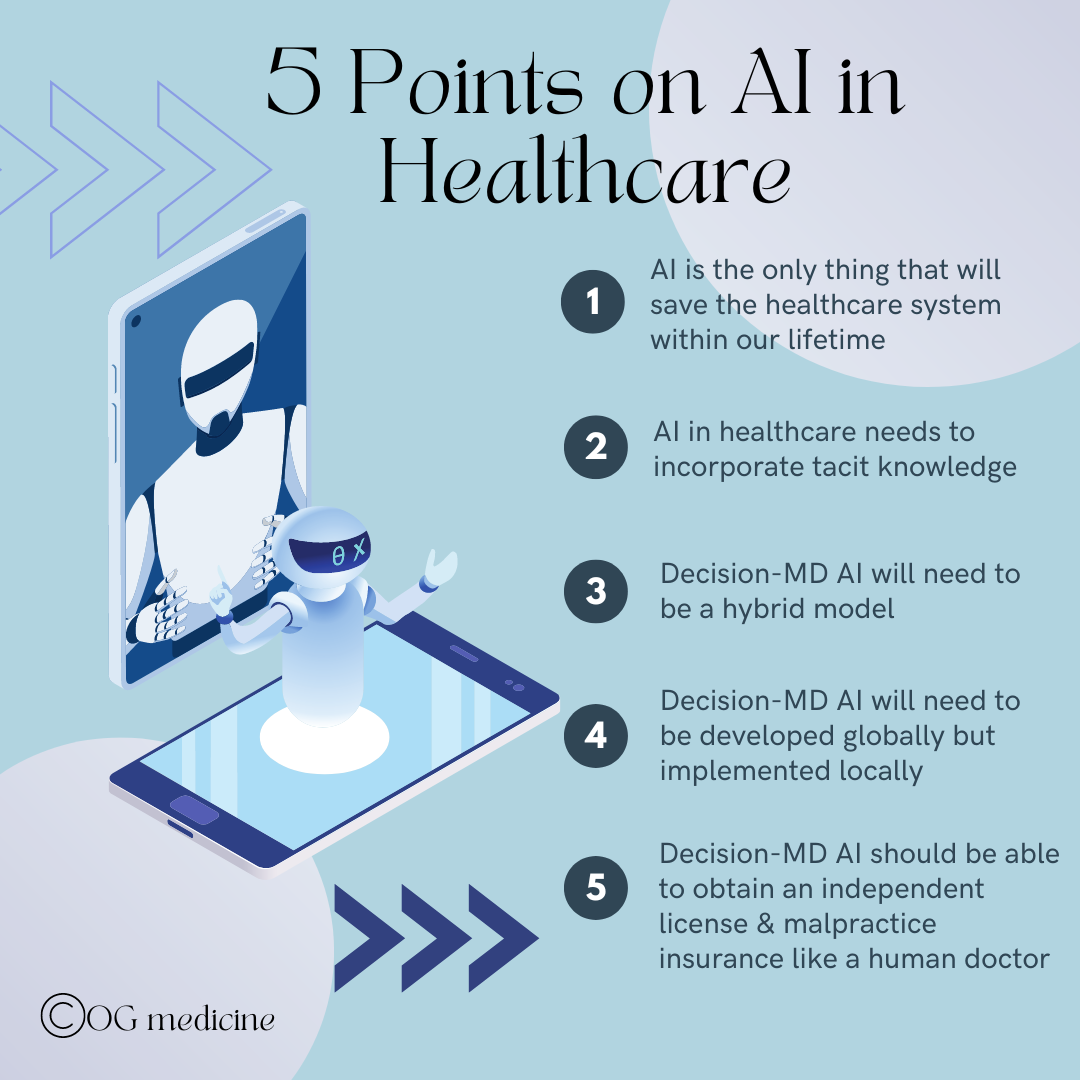

Personally, I think AI is the only thing that will save our healthcare system within our lifetime. In this article I’ll talk about how AI relates to physicians specifically, omitting for now the other ways AI can be used across the healthcare sector.

HOW CAN AI HELP DOCTORS?

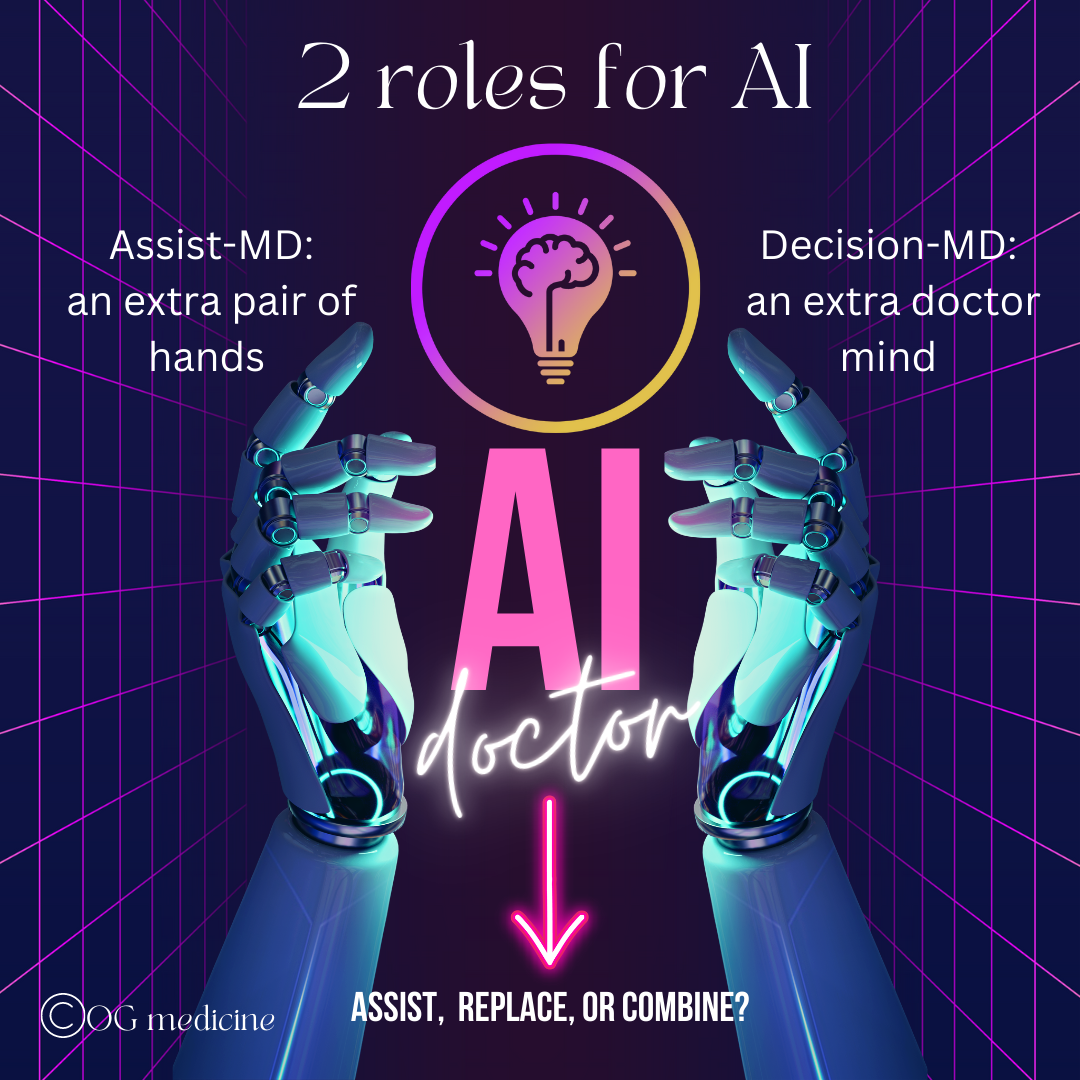

Before we get into the 5 points, it’s important to say a quick word on the ways in which AI is being, or will be, used in healthcare by physicians. In simplistic terms, this can be broken up into two categories:

Assisting the doctor: making the day-to-day easier, but leaving all the clinical decision making to the doctor (what I’ll call assist-MD AI)

this includes things like AI-documentation, AI-scheduling, AI-tracking of investigations, and off-loading other admin tasks

this helps healthcare by reducing the demand on the doctor’s time and thereby indirectly increasing the supply of doctors

Replacing the doctor: replacing either fully or in part the clinical judgement of the doctor (what I’ll call decision-MD AI)

this includes things like augmenting decision making (clinical decision support tools), interpreting investigations (tests, ECGs, images) and, of course, the holy grail: replacing the MD entirely with a patient-facing diagnostic AI.

this helps healthcare by directly increasing the supply of doctors (either in part or in full)

Given the breadth of this topic, the majority of my points will address the more controversial and elusive decision-MD AI, leaving assist-MD AI for a future post.

Now, a quick word on the creation of AI and why I think my ideas hold water.

AI in healthcare for physicians has 2 primary roles - assist the doctor (what I call assist-MD AI), or replace in full or in part the doctor’s medical decision making (what I call decision-MD AI). This article focuses primarily on the latter.

INNOVATION COMES FROM ANYWHERE

The truth is, I am not a computer engineer. I don’t have all the information when it comes to AI. Yet, it’s also true that no one has all the answers in a rapidly evolving field. Creating something new in healthcare is inherently collaborative work, where physicians do have a role.

When AI was first created, it came together out of the collaboration of people from many different backgrounds - there were the usual early suspects like Lady Ada Lovelace and Alan Turing, but later there was also neurologist/psychiatrist Warren McCulloch, mathematician Walter Pitts, mathematician and anti-aircraft fire-control researcher Norbert Wiener, experimental psychologist Kenneth Craik, neurologist William Grey Walter, engineer Oliver Selfridge, psychiatrist and anthropologist Gregory Bateson, chemist and psychologist Gordon Pask, and many, many others.

What I see in that list is a group of people creating something new by combining different kinds of knowledge. When you combine different views within a single individual - something called a boundary spanner, like Gordon Pask and Gregory Bateson - you start to get great leaps in innovation. Innovation is just another word for creativity, and creativity is just another way of saying connecting the dots. If you can see more dots than most people, you’ll invent things that no one else could see.

So, I may not be a computer engineer, and people from other fields may disagree with some of what I write about AI. I’m okay with that. My ideas come out of the many world-views I hold: as a doctor working in an academic institution where I train human doctors (medical students and residents); as someone educated in translational health science, with a smattering of understanding of philosophy, entrepreneurship, and design; and as a geriatrician who knows the human brain and specializes in the destruction of our mind (dementia). This gives me a unique view for theorizing how to construct a new mind (AI) in healthcare.

Without further ado, here are my 5 opinions on AI in healthcare:

1) AI IS THE ONLY THING THAT WILL SAVE THE HEALTHCARE SYSTEM WITHIN OUR LIFETIME

I’ve written in other posts that the greatest problem in medicine is capacity. Almost all the other problems can be linked back to this one central issue. There are not enough people to do the job well, and the job is really complicated.

During my residency, I remember a renowned internist telling me that during his own training they used to admit people to hospital to start an ACE inhibitor. This is now a medication that is started in family doctors’ offices without a second thought. Now we admit people with over a dozen medical co-morbidities, 20+ medications, and complex biopsychosocial situations.

At the same time that the complexity of our patients has increased, the complexity of our medical solutions has as well. The cardiology guidelines feel like they are updated every 6 months, the hypertension guidelines have about a dozen different ways to diagnose hypertension using different modalities and in different patient populations, and there seems to be a new drug for diabetes every time I turn around. Another attending, an ICU intensivist, once told me that when he trained, they used to carry around a textbook in their white-coat pocket, because one textbook was all you needed. That’s all there was. We didn’t know that much.

Now, we have apps like UpToDate in our pockets on our smartphones, with an endless quantity of detailed information. By one widely cited estimate, the totality of medical knowledge now doubles every 73 days. It is impossible for any one person to know it all. This is why we now have increasing specialization; gone are the days of the generalist, because there’s too much for a generalist to know. We now have generalists for each organ system, like a gastroenterologist who then further specializes into hepatology, ERCP, IBD, and the list goes on.

Family doctors, general internists, and general surgeons are probably the last vestiges of generalists, but even here, we’re starting to see the limits of the human mind. Stephen Hawking wrote in his book Brief Answers to the Big Questions that the invention of written language has meant we can now transmit enormous amounts of information from one generation to the next, and that this information is growing at an exponential rate. Yet, as Hawking writes, “the brains with which we process this information have evolved only on the Darwinian timescale. This is beginning to cause problems…no one person can be the master of more than a small corner of human knowledge”.

“No one person can be the master of more than a small corner of human knowledge. People have to specialise, in narrower and narrower fields. This is likely to be a major limitation in the future.”

What Hawking didn’t say is that this change in the system is natural and expected, because we are part of a complex adaptive system (CAS) (a future post will go into complexity further, so make sure you subscribe now). You can think of a CAS like an ecosystem, where everything is connected, always changing, and developing more complexity over time (the human body, the healthcare system, the stock market, etc.). This increasing complexity and subsequent need for self-organization into specializations is inherent to the nature of these systems. There’s nothing amiss with the explosion of complexity that we’re seeing - this is just how it goes.

Thus, we have an inevitable, foreseeable ongoing challenge with a limited supply of doctors and a limited ability to incorporate all the information we’re discovering. Fundamentally, this is a capacity issue.

When you have a capacity issue, you only have two options:

increase the supply, or

decrease the demand.

An AI that can diagnose and treat (make complex medical decisions) can also reduce human error (our brains cannot know all that there is to know), and reduce the use of unnecessary drugs and tests.

Reduce Demand

Reducing healthcare demand is possible through preventative medicine. In a separate post I’ll go into this in more detail, but suffice it to say that almost all chronic diseases - the things giving people a dozen diagnoses and 20+ medications - can be largely prevented with diet, exercise, and emotional health. However, the kind of preventative medicine initiative, or public health campaign, needed to turn around the ship of our current lifestyle is astronomical, and would take 50+ years to show its downstream effects in our healthcare system. This is still an option, but it won’t solve the problem in our lifetime.

Okay, what if we reduce complexity? What if we just stop doing so much medicine stuff? Well, for one, complex adaptive systems get increasingly complex; reducing complexity is extremely difficult. Humans also don’t like giving things up; we have a heuristic (or unconscious response) to the idea of losing something, called “loss aversion”. We feel roughly twice as much psychological pain at losing something as the pleasure we feel at gaining something of equal value. I cannot see a time where we collectively say: yes, let’s stop doing 50% of the things we’re doing and accept the consequences, whatever they may be.

Increase Supply

Unfortunately we can’t increase the supply of doctors in the traditional way because we can’t train doctors fast enough, and even if we could, our human brains are being eclipsed by the amount of knowledge we’ve created. We also can’t keep funding more resources - in Canada our healthcare system already accounts for 11% of our GDP. For a long time now, it’s been said that our system is unsustainable - how will funnelling in more money help? And of course, even if we had more physical resources, we still haven’t solved the problem of not enough human resources (doctors) to use them.

Thus, there are only two ways forward to avoid the impending collapse of our complex system - either reducing demand through preventative medicine (which will take several generations) OR finding a way to increase the supply of doctors that also addresses the vast reams of information growing at a rate that surpasses the human mind. Hmmmm… that sounds very much like a role for AI.

“There is no time to wait for Darwinian evolution to make us more intelligent...”

Hopefully I’ve made the case that AI is our only option in this lifetime, unless we want to accept the collapse of our current complex healthcare system and rebuild it anew (this would look and feel like an apocalypse, so not very ideal).

The next step then, is to build an AI that can actually deliver. Why don’t we already have an AI that can diagnose and treat us as well as a family doctor or specialist? Point number 2 will explain.

2) AI IN HEALTHCARE NEEDS TO INCORPORATE TACIT KNOWLEDGE

AI has both influenced and been influenced by psychology and neuroscience. Understanding how the brain works helped developers understand AI, and vice-versa. If we are trying to develop an AI that will mimic a physician’s mind, then we need to understand how doctors think.

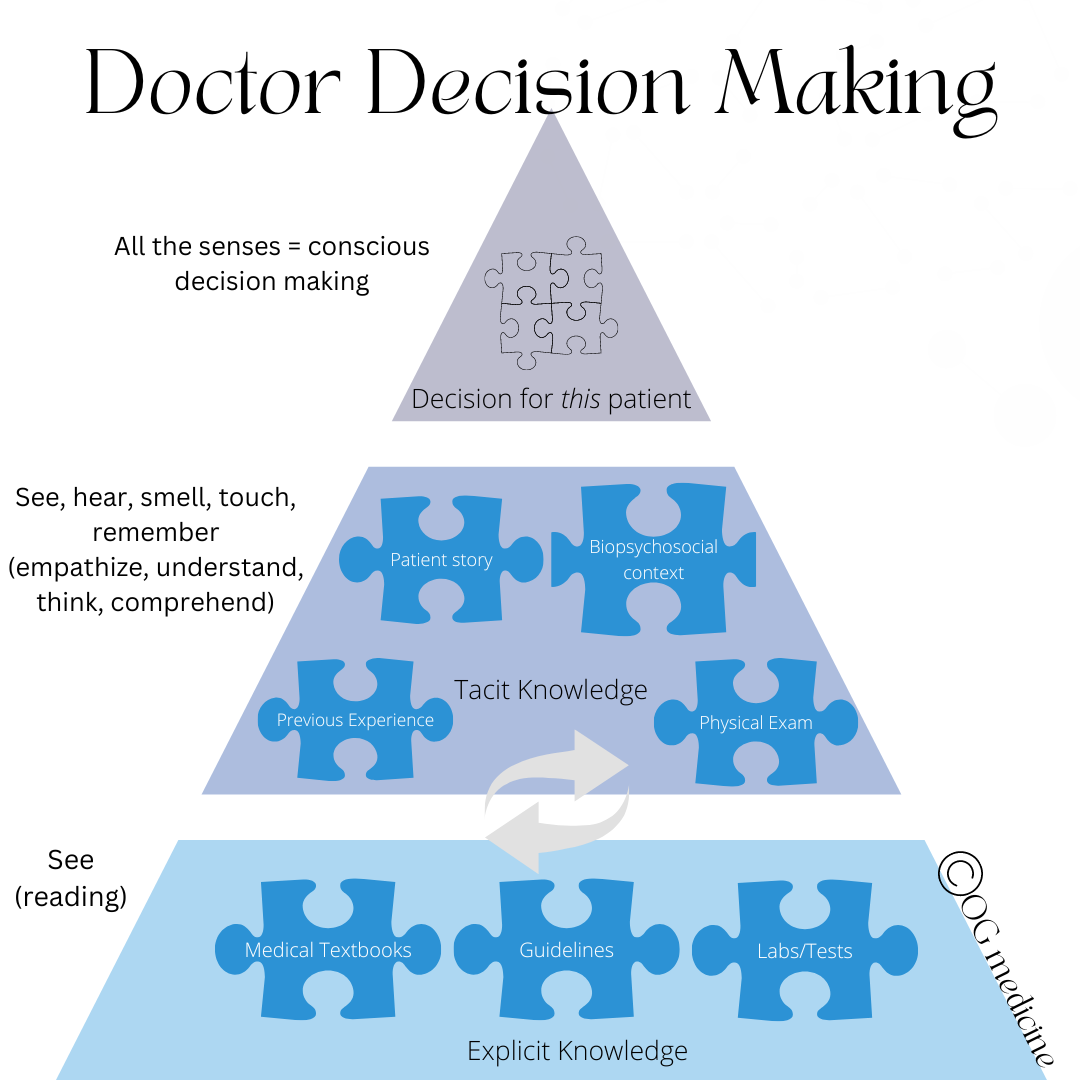

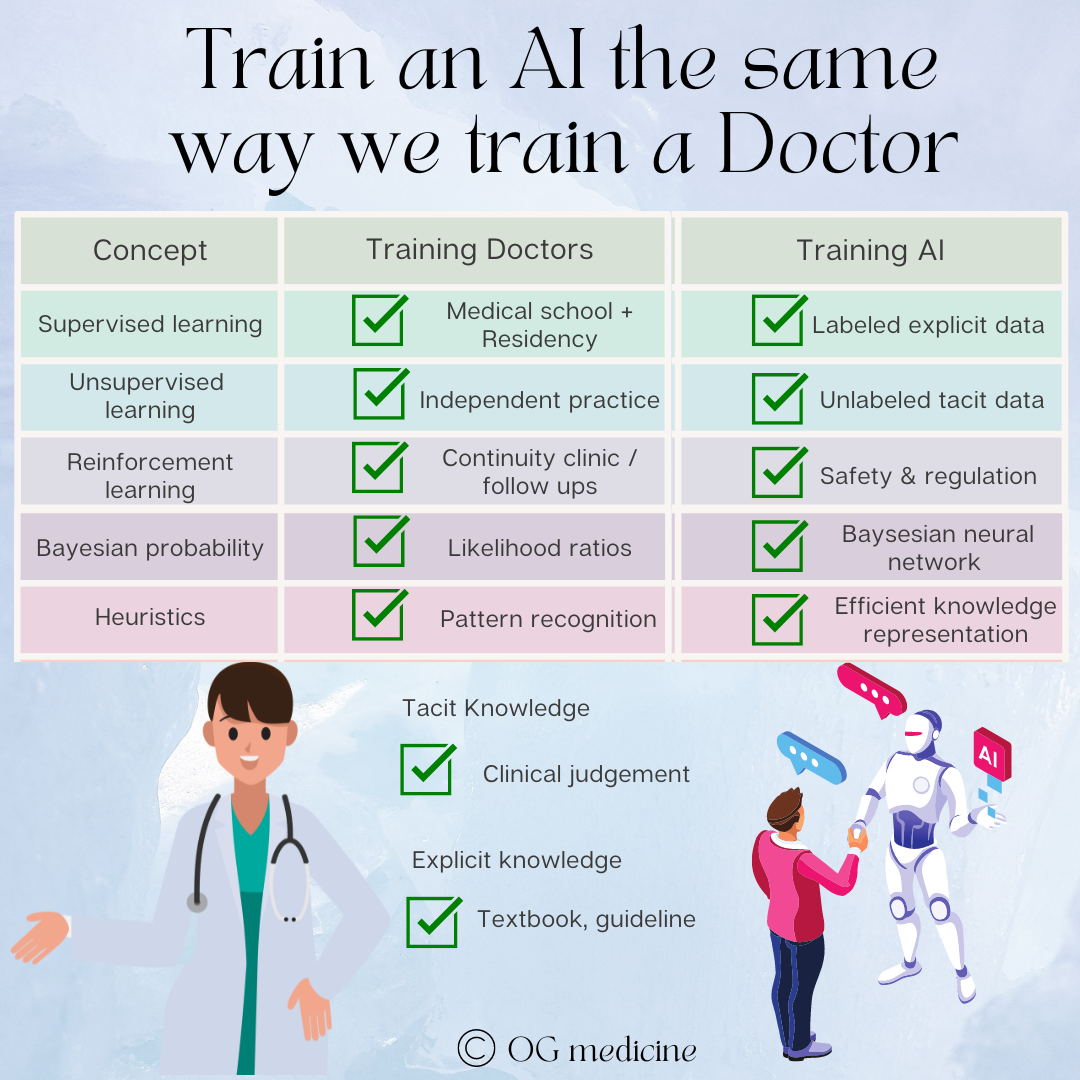

Doctors think using “mindlines” (Gabbay and le May, 2004). Mindlines, roughly speaking, are the combination of explicit knowledge (information that can be easily written down, like the medical facts found in a textbook) and tacit knowledge (information that is fuzzy, hard to describe, and is gathered through experience using our 5 senses and memory). These two sources of information are combined to make a clinical decision.

This is where I believe AI in healthcare has fallen flat. To train AI, you need a dataset. Most AIs that are developed to help diagnose and treat (clinical decision support) or replace a doctor (Babylon’s AI) involve neural networks trained with deep learning on large, existing datasets, most notably electronic medical records.

For anyone who has ever documented a clinical encounter in a note before, you can instantly see the flaw in this plan. The written record is an incomplete, pared-down highlight reel of a clinical encounter. It can be more or less accurate depending on the author, the time allotted, use of the copy-and-paste function, and a whole host of other factors.

This means the record doesn’t record tacit knowledge. The family doctor doesn’t write “their shirt was buttoned incorrectly and was inappropriate for the season, and this added to my clinical gestalt that this person has dementia”. Instead, the family doctor writes something like “wife concerned. forgetting to pay the bills. suspect dementia”. The family doctor likely noticed, unconsciously, this detail about the patient but they won’t put it into words in the chart - it’ll be the gestalt or “gut feel” of the 5-10 minute appointment where they end up thinking dementia and not delirium, medication effect, depression, or some other reason for memory changes, which explains why they decide to order certain investigations and prescribe certain medications. They take explicit information, combine it with a mad rush of other tacit information, and come up with a clinical judgement.

“We know more than we can tell”

The more experienced the physician, the more their clinical diagnoses and decisions are based on tacit knowledge. The more inexperienced the physician, the more the diagnosis rests on labour-intensive data-gathering and explicit knowledge. I remember handing over a patient to my staff attending, a brilliant internist, in the morning after a 24-hour call shift. I had spent a few hours asking a million questions, combing through the medical record, writing down all the lab tests, gathering reams of data…. and my attending pulled back the curtain (the patient was still in the ER), took one look at the patient, and yanked the curtain closed saying, “Yep. Heart failure”.

How did he know?? As an attending now myself, I can guess that he took in the fuzzy knowledge in the room (tacit) - the patient’s apparent age - 80s (higher risk for CHF) - how the patient was sitting in bed - upright (positional dyspnea) - that the patient was wearing oxygen (hypoxia) - that the feet sticking out from under the hospital blanket were puffy (peripheral edema) - and that the patient was overweight (likely CAD causing HFrEF). In a millisecond, all this information poured into the mind of the attending and combined with a mastery of explicit knowledge (epidemiology, pathophysiology, and clinical presentation of heart failure) to produce an accurate clinical decision.

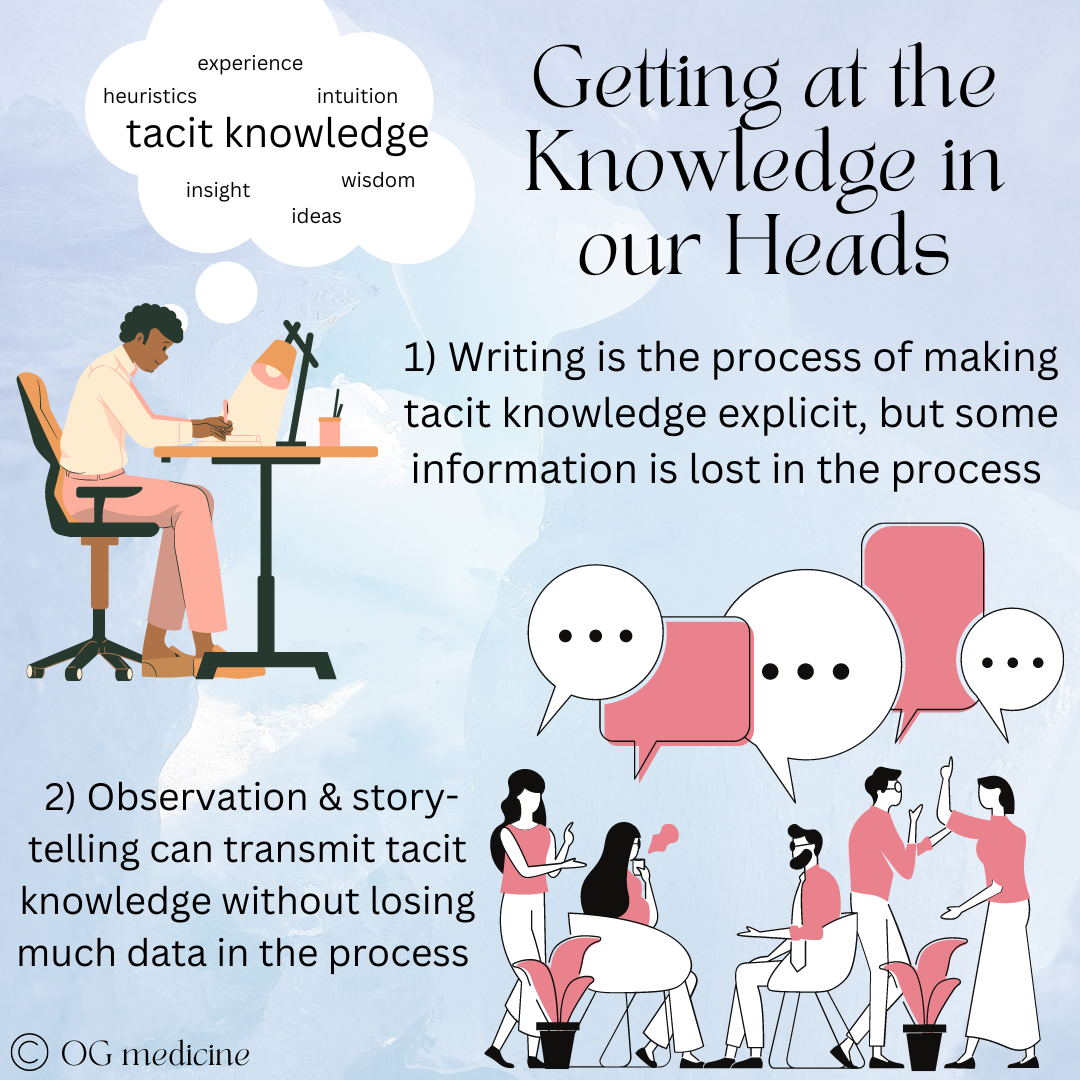

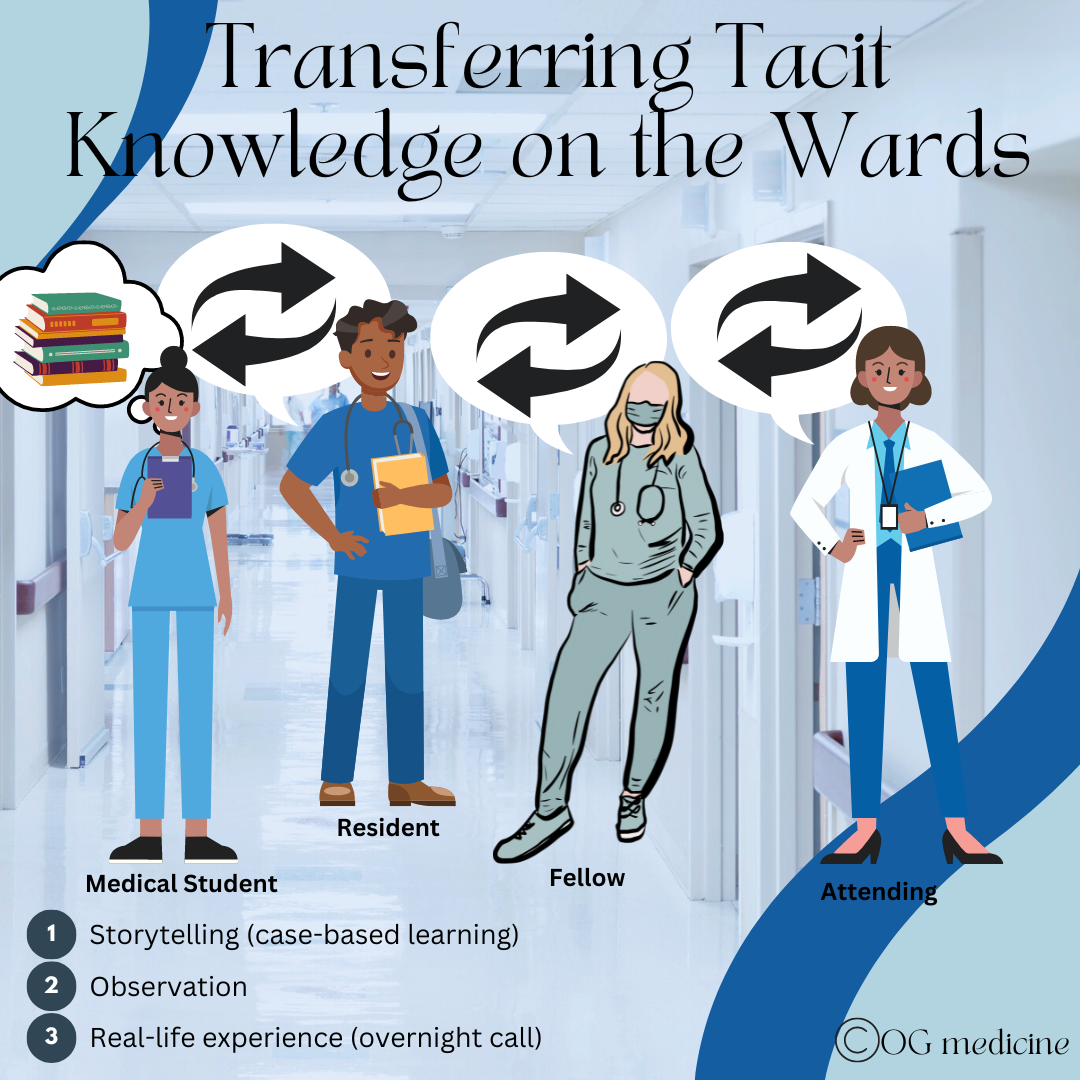

Tacit knowledge incorporates a whole range of “fuzzy knowledge”: the things that are hard to write down (they can be written down, but lose much of their richness in the process). Tacit knowledge is not routinely recorded in medicine, but is transferred between physicians through observation, storytelling, and personal experience.

Through residency we gather tacit knowledge through the process of experience, observation, and storytelling (“so I have this patient…”), and transmit this knowledge amongst ourselves using these same tactics. The R1 observes the R2 placing an arterial line before doing one themselves, leading to the adage “see one, do one, teach one”. You could read every textbook on the planet about arterial lines, but you won’t know how to do it until you watch, learn, and try.

Over the years, we build up our tacit knowledge dataset inside our minds. This is the difference between an R1 internal medicine resident and an R5, and an R5 and a 10-year physician. Despite studying textbooks in medical school, you don’t actually “know” how to be a doctor until you’ve gone through residency, which is essentially 2-5 years of tacit knowledge gathering (with some additional explicit knowledge learning).

This also explains why, if doctors stop gathering explicit knowledge over their careers and rely only on tacit knowledge, their clinical judgement can become warped by one-off Bayesian learning (“I had this patient once who x, so now I never y”). Both explicit and tacit knowledge are necessary for the best clinical decision making.

This means that we need to train our AIs the same way we train our doctors. We need to give them a scaffold of explicit knowledge by pre-training them on textbooks and guidelines, like medical school, and then we need to fill up the scaffold with tacit knowledge through storytelling and observation, like residency. We also need to make sure that they continue learning both explicit and tacit knowledge, which means updating their explicit scaffold as new guidelines become available.

The problem is that tacit knowledge isn’t collected in medicine in large enough quantities to form a dataset for AI. We need to be documenting clinical encounters in a whole new way. Until we start collecting and using this tacit knowledge, AI will not be able to make decisions at the level of physicians. AI will make decisions at the level of a medical student, trained off explicit, textbook-based medicine.

If you are still in doubt, consider where AI in healthcare has been successful. It has been effective for years where explicit, discrete data points are available (ECGs and MRIs), while areas that require fuzzy, context-specific tacit knowledge, like a family doctor’s visit, are still out of reach. Even clinical decision support tools - which aim to only partially “replace” a doctor - tend to be clunky and provide inaccurate or irrelevant recommendations, limiting their uptake by physicians.

If we train AIs on tacit data (video recordings, observation, conversations), not just on explicit data (written patient records in electronic medical records) we will be much closer to an intuitive AI that mimics a doctor, not a rudimentary AI that mimics a medical student.

3) DECISION-MD AI WILL NEED TO BE A HYBRID MODEL

In my readings and discussions with machine-learning students at Oxford, it appears there’s some debate between the different “kinds” of AI. Largely, these can be broken into symbolic AI, which uses logic and IF-THEN rules, and cybernetic AI, which uses things like neural networks. In the 1960s there was such a clash that cybernetic AI was almost completely defunded, and it wasn’t until the 1980s that neural networks gained traction again. Now, the focus is of course all about machine learning and neural-network-based systems like ChatGPT.

However, when it comes to complex AI ambitions like partially or fully replacing a doctor, I am on the side of those like Allen Newell, Stan Franklin, Marvin Minsky, Aaron Sloman, and others, who believe in “hybrid-AI” as the key to creating “whole-mind AI”.

“Whole-mind AI” is used in computing to describe artificial general intelligence (AGI). Unlike specialist AI, AGI is able to do many tasks, not just one specific task (like language, vision, learning, etc). Replacing a family doctor is a very general task, and so when discussing decision-MD AI, we are talking about creating an AGI, an achievement which has been notoriously out-of-reach across all disciplines, let alone medicine.

“Difficult though it is to build a high-performing AI specialist, building an AI generalist is orders of magnitude harder. Deep learning isn’t the answer: its aficionados admit that ‘new paradigms are needed’ to combine it with complex reasoning - scholarly code for ‘we haven’t got a clue’”

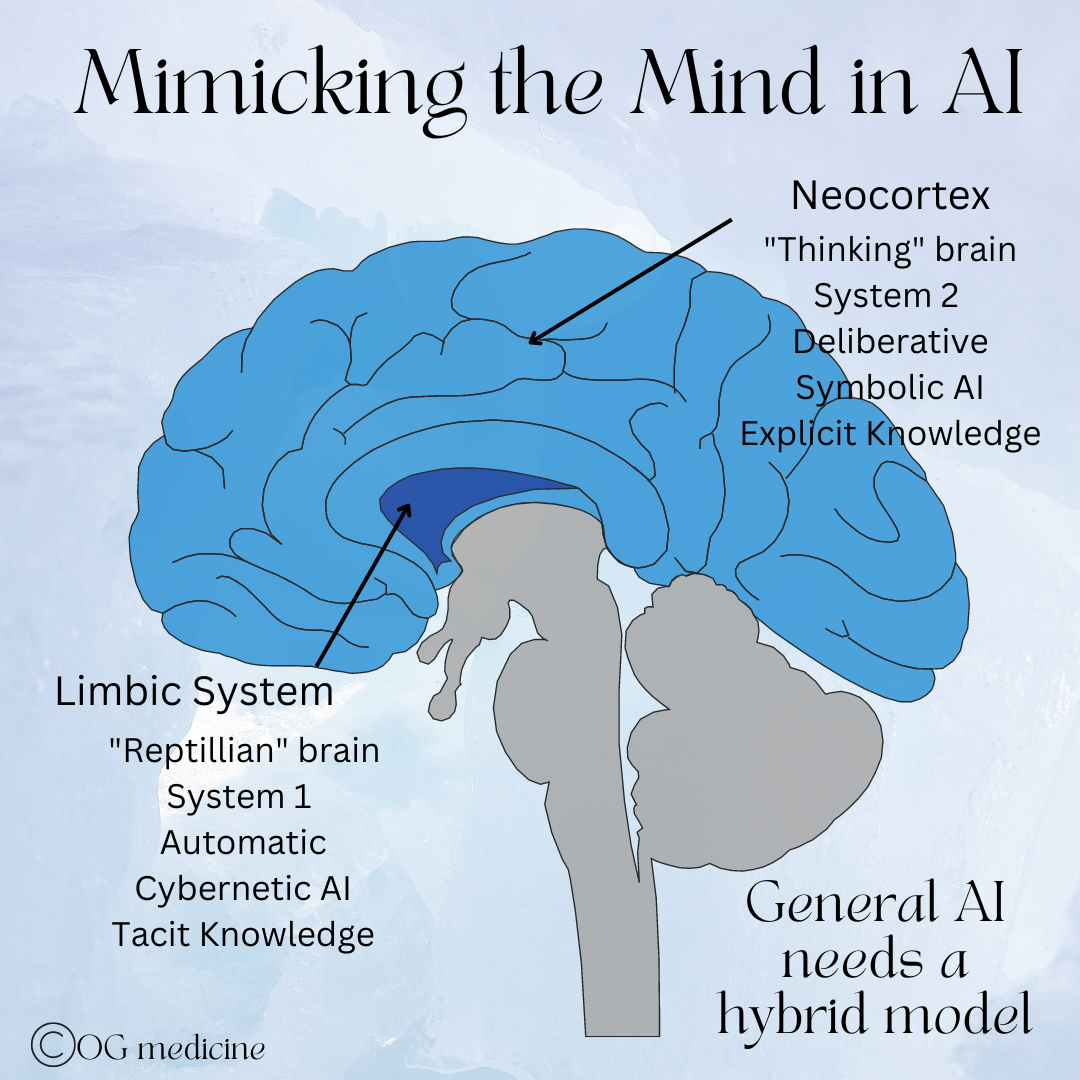

From my standpoint as a physician, it seems likely that the combination of two different “operating systems” (symbolic and cybernetic AI), will be necessary to achieve AGI because our brains also use two different “operating systems” to make decisions (neocortex and limbic systems).

This is a highly technical topic, and as a doctor, my goal is not to write a master’s-level thesis as though I am a computer programmer. My goal is to point out some patterns that I see across disciplines, and apply them to what I know about how doctors think.

So here’s my doctor-version of what AI techniques are needed, outlined in long-form thought to “show-my-work” along the way.

A) Replicating how we think

First, very simplistically, our brains have two systems. There’s the deliberative portion - the neocortex, or System 2 as Daniel Kahneman called it - which does “higher-level” functions like critical thinking, judgement, and attention. This part of the brain is slow relative to System 1, and is therefore reserved (without us realizing it) for when we need to concentrate, fact-check, verify, and act more carefully. Think of it as the “loading mode” of wifi back in the day. While it seems like we’d make better choices if we were always thinking “clearly”, we simply can’t use System 2 all the time, or we wouldn’t be able to move through life efficiently.

This is why life started out with System 1, which works extremely quickly. System 1 is the “reptilian brain” part of our brain, also called the limbic system in medicine today. It uses things like pattern recognition, heuristics, and emotion to respond to the world intuitively, and therefore quickly. It takes short-cuts in the data, jumping to conclusions based on past experiences and tiny pieces of information, rather than deliberatively looking at each data point intently. These short-cuts can sometimes arrive at the wrong answer, but at least it gets an answer with the necessary speed to survive (hey - a lion! Run!…oh wait, it’s just a bush moving).

B) Comparing this to cybernetic and symbolic AI

Neural networks are like our limbic system. They use parallel-processing of many individual units (like a brain using many neurons), and are able to contend with changing probabilities. They can “learn patterns, and associations between patterns, by being shown examples instead of being explicitly programmed” (Boden, 2018). They observe, to learn. That is how human residents learn too.

They can also recognize “messy” (i.e. fuzzy) evidence. They can make sense of conflicting evidence, which is required in medical decision making. The backpropagation that is often used to train neural networks (supervised learning) is also just like handover in the morning, when the attending reviews the case and teaches you where you messed up, or what you got right.

C) Applying this to healthcare

While all this sounds great, neural networks in healthcare are a) not being trained on the right kind of data (using explicit language-based medical records, rather than the necessary tacit knowledge), and b) they are in danger of seeing patterns where they don’t exist - known as “hallucinations” - just like our limbic system.

This is why it will be imperative in healthcare to have an explicit system, like our neocortex or a rule-based AI, that can be triggered to double check the work of the neural network from time to time.

Why not just use a rule-based AI, you ask? Once again, rules are explicit and lack the tacit knowledge that allows doctors to diagnose disease across an infinite number of permutations, and rule-based systems take too long to build and compute, requiring vast energy stores to process information (just like our neocortex, which uses a significant portion of our daily glucose). Despite these limitations, I believe rule-based AI will be essential to AGI. After all, when is the answer to problems in life not a balance between two extremes?

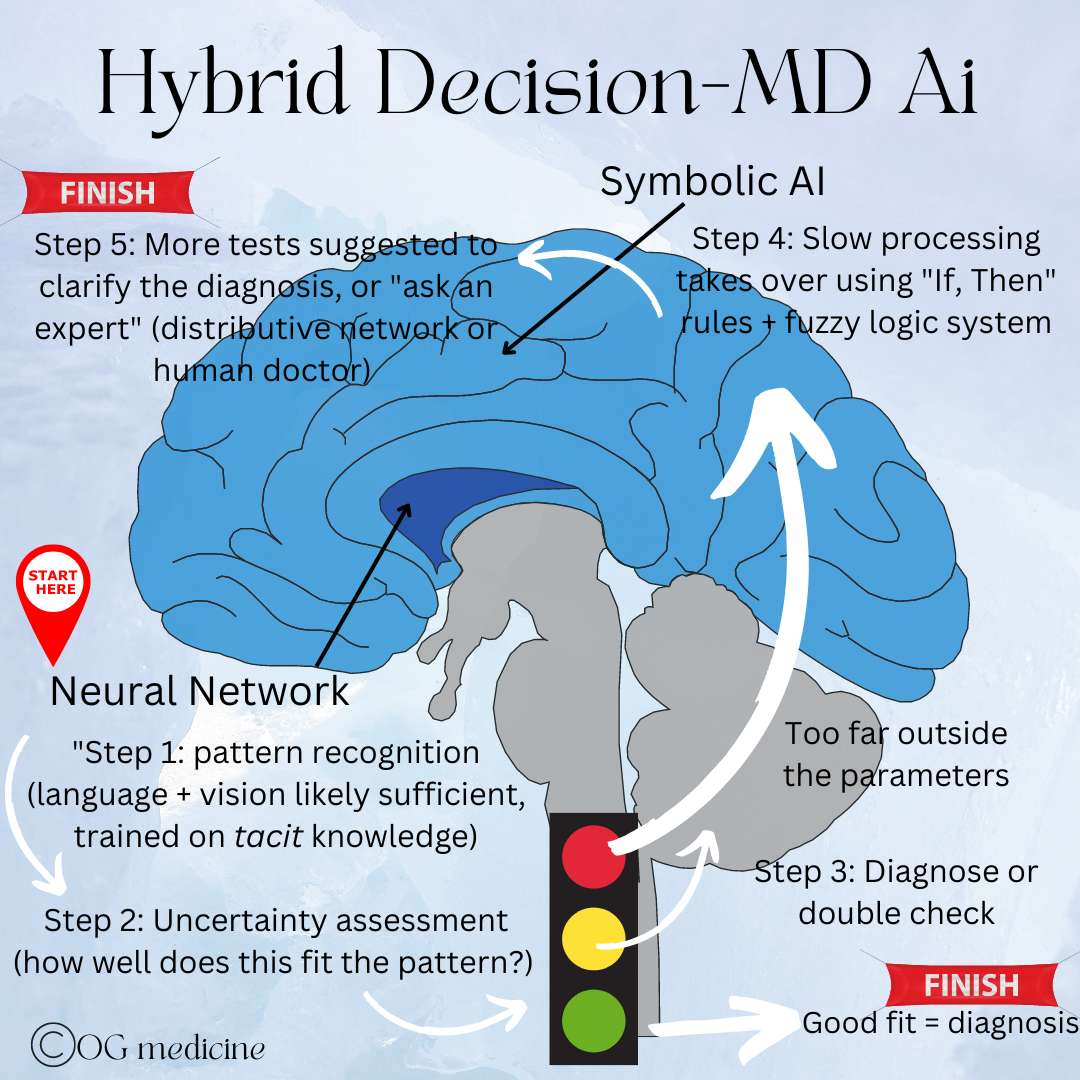

I therefore suggest that the paradigm shift called for to create AGI is as follows:

A hybrid model in which the neural network is trained on tacit knowledge and responds first to a disease problem, using pattern recognition to interpret new data.

If the situation is too far outside the bounds of its training data, rather than hallucinating an answer, it will trigger the involvement of a symbolic, rule-based AI.

The symbolic portion itself is built from high-level, rule-based logic in a fuzzy-logic system.

If this still does not reduce the uncertainty enough to fit a known pattern, then a combined deliberation process is used to come to a joint decision. I envision this to be either a distributed cognitive network, or the point at which a human doctor would weigh in, as a form of supervised learning.
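To make the escalation order concrete, here is a minimal Python sketch of the hybrid idea above. It is a toy under stated assumptions, not a real diagnostic system: the three layers (`pattern_model`, `novelty`, `rules`), the thresholds, and the heart-failure toy rules are hypothetical stand-ins invented for illustration, and the extra confidence check on the pattern layer is an assumption beyond the four steps listed.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

# Hypothetical interfaces for the three layers; none of these are real library APIs.
PatternModel = Callable[[dict], Tuple[str, float]]          # stand-in for a trained neural network
NoveltyScore = Callable[[dict], float]                      # 0 = familiar case, 1 = far from training data
RuleEngine = Callable[[dict], Optional[Tuple[str, float]]]  # stand-in for symbolic / fuzzy rules


@dataclass
class Decision:
    diagnosis: Optional[str]  # None means "no automated answer"
    stage: str                # which layer produced the answer
    confidence: float


def hybrid_diagnose(case: dict, pattern_model: PatternModel, novelty: NoveltyScore,
                    rules: RuleEngine, novelty_limit: float = 0.3,
                    min_confidence: float = 0.8) -> Decision:
    # Step 1: pattern recognition answers first, but only for cases close to its training data.
    if novelty(case) <= novelty_limit:
        label, conf = pattern_model(case)
        if conf >= min_confidence:
            return Decision(label, "pattern", conf)
    # Step 2: out of bounds (or unsure), so defer to the rule-based layer instead of guessing.
    verdict = rules(case)
    if verdict is not None and verdict[1] >= min_confidence:
        return Decision(verdict[0], "rules", verdict[1])
    # Step 3: still too uncertain, so escalate to a human clinician.
    return Decision(None, "escalate", 0.0)


# --- Hypothetical toy components (illustrative values only, not clinical guidance) ---
KNOWN_FINDINGS = {"age", "orthopnea", "on_oxygen", "leg_edema"}


def toy_novelty(case: dict) -> float:
    # Fraction of findings in this case that the toy pattern model never saw in training.
    unseen = [k for k in case if k not in KNOWN_FINDINGS]
    return len(unseen) / max(len(case), 1)


def toy_pattern_model(case: dict) -> Tuple[str, float]:
    # Crude "gestalt": share of three classic heart-failure findings that are present.
    hits = sum(bool(case.get(k)) for k in ("orthopnea", "on_oxygen", "leg_edema"))
    return "heart failure", hits / 3


def toy_rules(case: dict) -> Optional[Tuple[str, float]]:
    # One explicit IF-THEN rule whose output is a graded (fuzzy-style) degree of membership.
    bnp = case.get("bnp_pg_ml", 0)
    if case.get("leg_edema") and bnp > 100:
        return "heart failure", min(bnp / 400, 1.0)
    return None


if __name__ == "__main__":
    cases = {
        "typical": {"age": 84, "orthopnea": True, "on_oxygen": True, "leg_edema": True},
        "odd": {"age": 84, "leg_edema": True, "bnp_pg_ml": 400, "new_rash": True},
        "different": {"age": 30, "new_rash": True, "joint_pain": True},
    }
    for name, case in cases.items():
        print(name, hybrid_diagnose(case, toy_pattern_model, toy_novelty, toy_rules))
```

With these toy inputs the familiar case is answered by the pattern layer, the case with unfamiliar findings falls through to the rule layer, and the case neither layer can handle is escalated to a clinician. The design choice worth noting is that the pattern layer is never asked to answer out-of-bounds cases at all, which is the sketch's stand-in for "rather than hallucinating an answer".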

D) Comparing this to diagnostic reasoning

How do I know this will work? I don’t. But I have a pretty good guess because this is exactly how a physician’s diagnostic reasoning works on the medical wards.

Humans are infinitely variable, and so clinicians use both pattern recognition and deliberative logic to come to the right diagnosis.

It is rare to see a “text-book presentation” of disease, with most patients presenting with only a loose resemblance to the data that we were trained on in medical school. We are all unique in our own way.

As well, patients not only present differently from one another, but they can also present differently at different time points in their own disease. This means there is variability not only between individuals, but also within the same individual depending on their position in time. Yay.

This creates an astounding amount of uncertainty and variability in the data - essentially needing to compute reality in 4D - and yet an expert physician can sift through this quagmire to come to a conclusion - either a diagnosis, or an idea of the next steps needed to further investigate the disease.

“All kinds of knowledge are competing for attention. In clinical consultations, not everything is or can be taken into account; there is limited time, our brains do not process everything, we are forgetful and the ‘whole’ story is not told to us. ”

It is by breaking this process down into something resembling simplicity that we realize we are diagnosing using a hybrid model of computational thinking. Just like above, we:

1) First use our expert pattern recognition to see if the data fit well with what we’re expecting to see, based on what we know and have seen before (like a neural network). This is the bucket I’d call “typical disease”.

2) If the patient doesn’t seem to quite fit into a typical disease, we sense something is off and use our deliberative minds (like symbolic logic), to retrace our steps, reanalyze the data, and think more deeply about what we’re seeing.

3) If we still can’t figure it out, we call in extra support - our colleagues - who have similar but different information and experiences in their own minds (like a distributed network). This allows us to (hopefully) diagnose accurately all 3 high-level types of disease: typical, odd, and definitely different disease.

E) Putting it together

In sum, without the hybrid model in decision-MD AI, we will invariably have errors as the neural network applies patterns to situations that don’t quite fit (the odd and definitely different), hallucinating answers that could be deadly when dealing with disease.

However, we need to use neural networks because symbolic AI alone will never be sufficiently trained given the infinite variability of humanity.

We therefore need a tacit-based-explicit-verified AI that can analyze the information, combine this with additional investigations, and when uncertainty is still out-of-bounds, call a colleague.

4) DECISION-MD AI WILL NEED TO BE DEVELOPED GLOBALLY BUT IMPLEMENTED LOCALLY

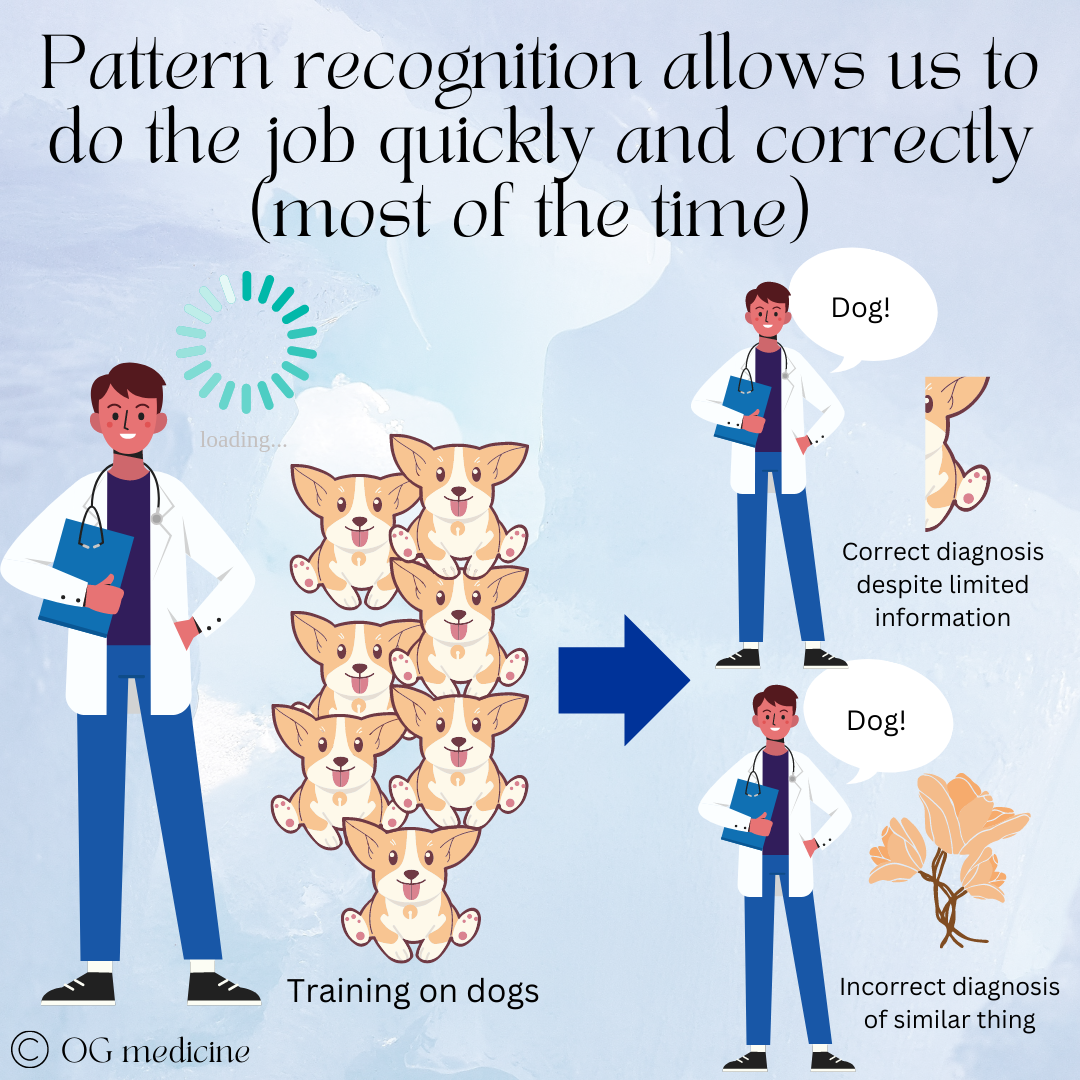

Here’s the rub: our tacit knowledge is based on experience with hundreds or thousands of individual cases, from which we develop pattern recognition that allows us to move from novice to expert. An obvious result is that the things we see more of become the strongest patterns in our mind. This is helpful, because it makes us fast and effective. This is also a problem, because it makes us biased.

Doctors become experts when they’ve seen hundreds of cases of the same thing, developing strong pattern recognition and clinical skills. This enables them to make accurate diagnoses quickly, often with incomplete information. However, it can become a problem if they incorrectly apply the pattern to something that is meaningfully different.

For example, if we mostly see Caucasian patients, we are not very good at picking up skin cancer in persons of colour. This is not a product of doctors being intentionally racist. This is a consequence of the way pattern recognition develops. We don’t recognize the cancer because it doesn’t look like the pattern of cancer that we are familiar with, and we will say “normal!” even when it’s disease.

The opposite case occurs in South Africa. During my course on Healthcare Policy and Organizations at Oxford, I was talking to a research fellow who is studying the problem of racial bias in South African doctors. There, physicians struggle to diagnose people with lighter skin tones correctly because most of the textbooks show disease on darker skin. Neither case is okay; one is historically more problematic, and both are still happening.

Thus, what we see (our training data) informs what we make of new information that we receive.

The same problem therefore quite obviously occurs in AI, with biased AI becoming a hot topic in the news.

Just like humans, neural networks learn from what they are shown, and if the data under-represent or misrepresent certain groups (e.g. showing only dogs, never flowers), the network will develop weak or wrong pattern recognition for those circumstances.
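A deliberately tiny Python toy can make the training-data point concrete. Everything here is an assumption for illustration: the two groups, the single hypothetical feature, and all values are synthetic numbers, not real clinical data, and the "model" is just a one-feature threshold placed midway between the highest healthy value and the lowest diseased value seen in training. In group B the made-up disease signal is weaker, so a threshold learned only from group A calls those cases "normal".

```python
from typing import List, Tuple

Sample = Tuple[float, bool]  # (hypothetical feature value, has_disease)

# Synthetic, made-up values; disease produces a weaker signal in group B.
GROUP_A: List[Sample] = [(0.10, False), (0.15, False), (0.20, False),
                         (0.60, True), (0.70, True), (0.80, True)]
GROUP_B: List[Sample] = [(0.05, False), (0.10, False), (0.30, True), (0.35, True)]


def learn_threshold(train: List[Sample]) -> float:
    """Midpoint between the highest healthy value and the lowest diseased value.
    Assumes the training data are separable, with disease on the high side."""
    highest_healthy = max(x for x, sick in train if not sick)
    lowest_diseased = min(x for x, sick in train if sick)
    return (highest_healthy + lowest_diseased) / 2


def accuracy(threshold: float, data: List[Sample]) -> float:
    # A case is called "disease" when its feature value exceeds the threshold.
    correct = sum((x > threshold) == sick for x, sick in data)
    return correct / len(data)


narrow = learn_threshold(GROUP_A)           # "trained" on one group only
broad = learn_threshold(GROUP_A + GROUP_B)  # "trained" on both groups

if __name__ == "__main__":
    for name, t in [("group A only", narrow), ("groups A + B", broad)]:
        print(f"{name}: threshold={t:.3f}, "
              f"accuracy A={accuracy(t, GROUP_A):.2f}, accuracy B={accuracy(t, GROUP_B):.2f}")
```

The point is the asymmetry, not the numbers: the learning rule never changed, only what it was shown, and the narrowly trained version misses every diseased case in group B while looking perfect on group A.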

There’s the old adage that goes something like “if you go to a surgeon he tells you it’s a surgical problem; if you go to an internist he tells you it’s a medical problem”. Our minds, and our AI, are geared to see the patterns that we’ve trained them to see.

This is why we need to train our AI on the tacit knowledge of physicians from all over the world, i.e. develop the AI globally.

Doctors who serve populations with higher proportions of a particular race, ethnicity, gender, sex, age group, or rare disease will have the strongest pattern-recognition expertise in accurately diagnosing and treating individuals from those groups.

Integrating their tacit knowledge into the “collective pool” of tacit knowledge will enable the AI to be better than doctors who have under-developed patterns based on their training.

By gathering stories and observing doctors from all over the world, decision-MD AI will be able to accurately diagnose all conditions in all people, rather than mistaking a dog for a flower because it has met only a few flowers before.

This will help solve the issue of bias, which will be a huge step forward in ensuring equal access and care for all.

Gathering the tacit knowledge from the global community of physicians also ensures that all medical knowledge is valued and respected.

It is incorrect and frankly antiquated to assume that tacit knowledge from North American doctors would be sufficient to care for people in other countries. Local variation in epidemiology, cultural customs, socioeconomic needs, and healthcare resources makes the local doctors the experts, not the physicians flying in to “help”, even if they come with their extensive diagnostic tools and medications.

A real-life example of this occurred during my medical school training.

I did a one-month elective in Peru with an outreach team providing medical care to underserved populations. Although we worked with local partners, Canadian physicians were still providing most of the care. One of my colleagues was exposed to cavitary TB while caring for a patient with a chronic cough, because in Canada we don’t think of TB when someone has been coughing for a while; we think of COPD, GERD, asthma, etc. In Peru, a cough lasting more than 2 weeks is TB until proven otherwise. (You can read my peer-reviewed publication on this topic, and on the need for adequate training, cultural competency, and ethical and sustainable practices in medical outreach, here.)

This example highlights not only that physicians in other countries have extremely valuable tacit knowledge to share, but also that decision-MD AI will need to take local practice variation into account. Global knowledge can be used to better care for groups that are otherwise under- or misrepresented locally, but the remainder of the tacit knowledge used by a whole-MD AI will need to come from the local physicians serving that particular population.

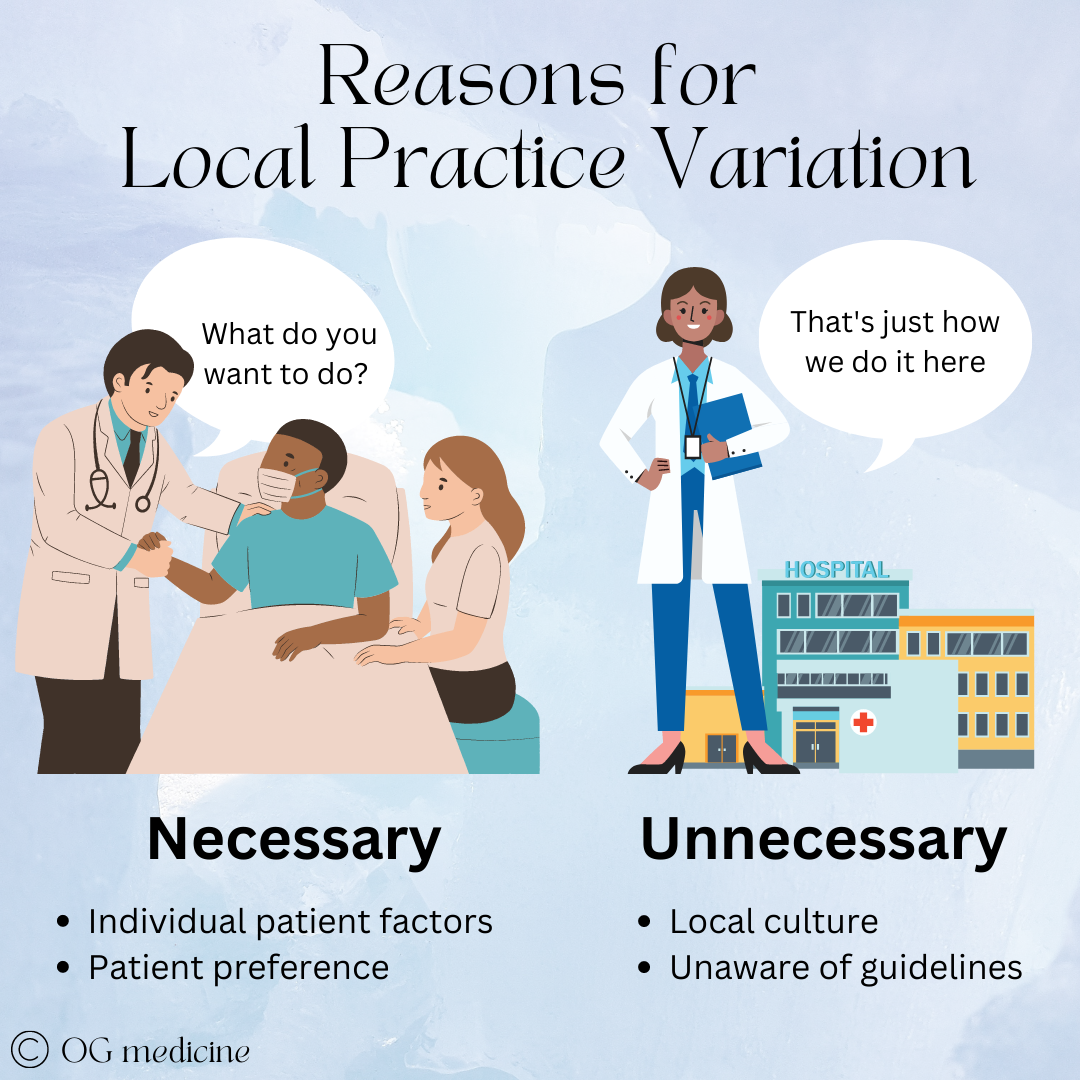

The reasons for practice variation are two-fold:

Necessary practice variation - Patient populations and preferences are genuinely different in different places (even within the same city sometimes!)

Unnecessary practice variation - Physicians practice differently because of cultural patterns and a lack or ignorance of clinical guidelines.

The first will always need to be respected and taken into account by decision-MD AI. The latter will need to be taken into account initially, to gain acceptance by physicians; eventually it can be eliminated, and correcting it may actually serve as a teaching tool for physicians.

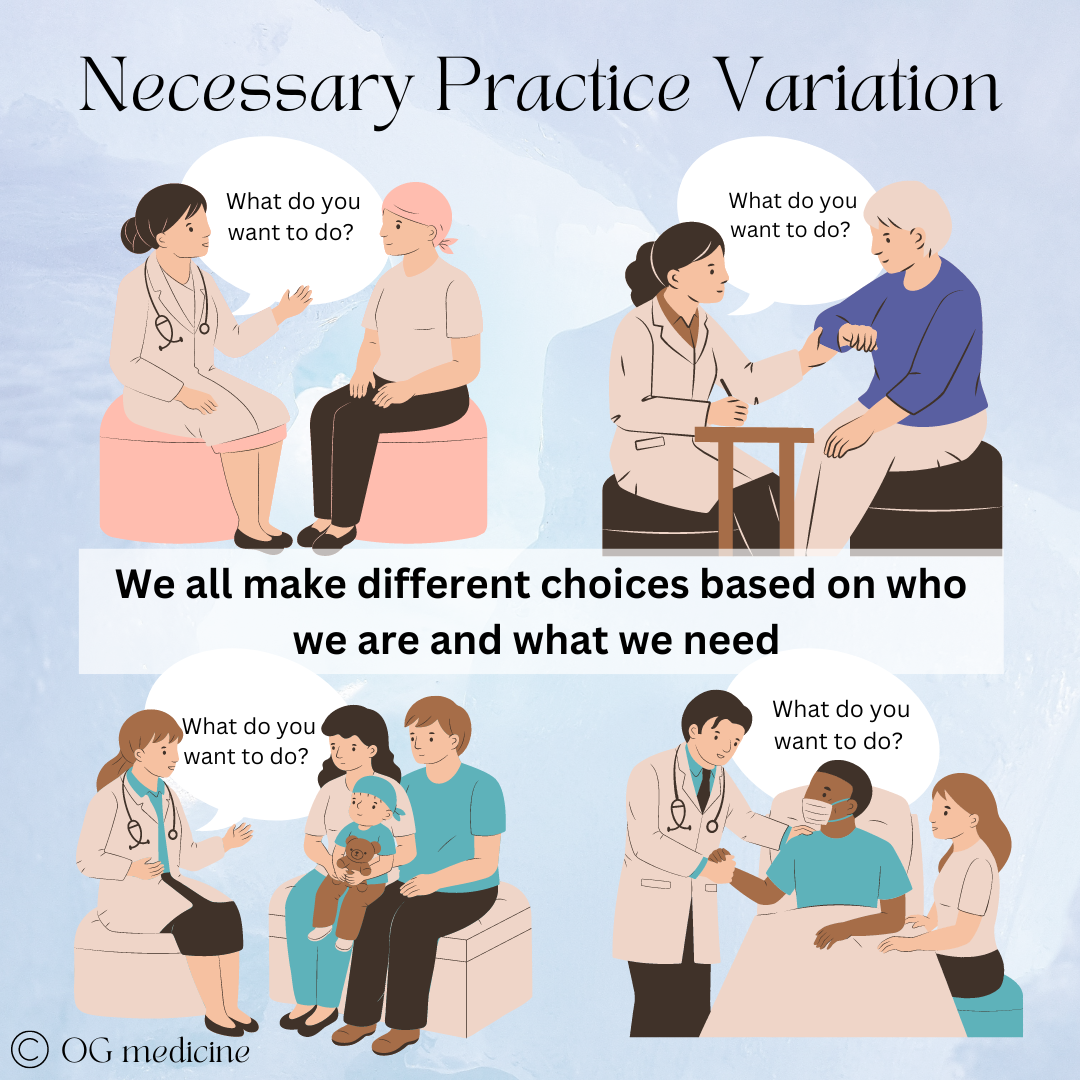

1. Necessary practice variation

Medicine is practiced slightly differently in different places. Some of this variation is necessary based on variation in the population’s biopsychosocial context and patient preferences.

For example, within the same city of Hamilton, Ontario, there is practice variation in the length of stay for bloodstream infections between the Hamilton General Hospital (HGH) and the Juravinski Hospital (JH). During my internal medicine training, when patients were admitted with sepsis at HGH they often remained admitted for the full 4-6 weeks to receive IV antibiotics, even though this could be done at home. In comparison, at the JH, patients would often be admitted for a week or so to be stabilized, and then once improving, be discharged home with IV antibiotics administered by a home-care nurse. What gives? Are the doctors just bad at HGH?

No, of course not. Every pattern that you see has a reason (remember that; we’ll talk about it in a future blog post). In this case, the reason was the difference in the biopsychosocial context of the patients who lived near HGH. In that area, many patients live with addictions and IV drug use. The infection was introduced through IV drug use, yet the treatment required a PICC (peripherally inserted central catheter) to be kept in place for the full 4-6 weeks. The treatment actually made it easier to continue the very thing that caused the infection in the first place. Patients themselves would choose, with the support of their physicians, to stay in hospital as a risk-reduction strategy to avoid using the PICC line recreationally.

On the other hand, the patient population that is typically admitted to the JH tends to be older, more affluent, and immunosuppressed because JH is the regional cancer centre. These patients get sepsis because of their age/frailty/comorbidities, and having a PICC line at home doesn’t pose a hazard to their health, while staying admitted to hospital is usually not desirable.

A hospital administrator who looked at these differences from 10,000 feet might think the practice variation should be standardized, knowing that longer lengths of stay are expensive. In fact, both hospitals are doing the right thing for their patients, and by preventing recreational PICC use, the physicians at HGH are reducing repeat admissions and likely morbidity and mortality. This is necessary variation, and a decision-MD AI will need to account for this.

Beyond the obvious necessity for patients, accounting for this variation is also necessary for the success of decision-MD AI. When decision-MD AI is first deployed, it will be judged by physicians. If it does not make the same clinical decision that they would have made for that particular patient, they will not support its use, and this resistance will become a bottleneck to the capacity building that AI offers.

So, solution #2 is: the decision-MD AI will need to be trained predominantly on the tacit knowledge of the doctors where it will be deployed, combined with a backdrop of global knowledge to reduce bias. This could mean each hospital, each clinic, each province, or each country (trial and error will have to decide how micro the training needs to get… could it even be for each individual doctor?).
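One way to picture “predominantly local, with a global backdrop” is as per-case training weights. The sketch below is a minimal illustration only: it assumes each training case carries a hypothetical `"local"` or `"global"` source tag, and both the function name `blend_weights` and the default 80/20 split are placeholders. The right split, and how narrowly “local” is drawn, is exactly the trial-and-error question above.

```python
from collections import Counter


def blend_weights(sources, local_share=0.8):
    """Return one training weight per case.

    Cases tagged "local" together carry `local_share` of the total weight and
    cases tagged "global" carry the remainder, however many of each there are.
    The tags and the default 0.8 split are illustrative assumptions.
    """
    if not 0.0 <= local_share <= 1.0:
        raise ValueError("local_share must be between 0 and 1")
    counts = Counter(sources)
    share = {"local": local_share, "global": 1.0 - local_share}
    weights = []
    for src in sources:
        if src not in share:
            raise ValueError(f"unknown source tag: {src!r}")
        # Spread each group's share evenly across the cases in that group.
        weights.append(share[src] / counts[src])
    return weights


# Two local cases and four global ones: the local pair still dominates.
print(blend_weights(["local", "local", "global", "global", "global", "global"]))
```

Because the weights are normalized per group, a small local dataset is not drowned out by a large global one; if one group is absent entirely, its share simply goes unused.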

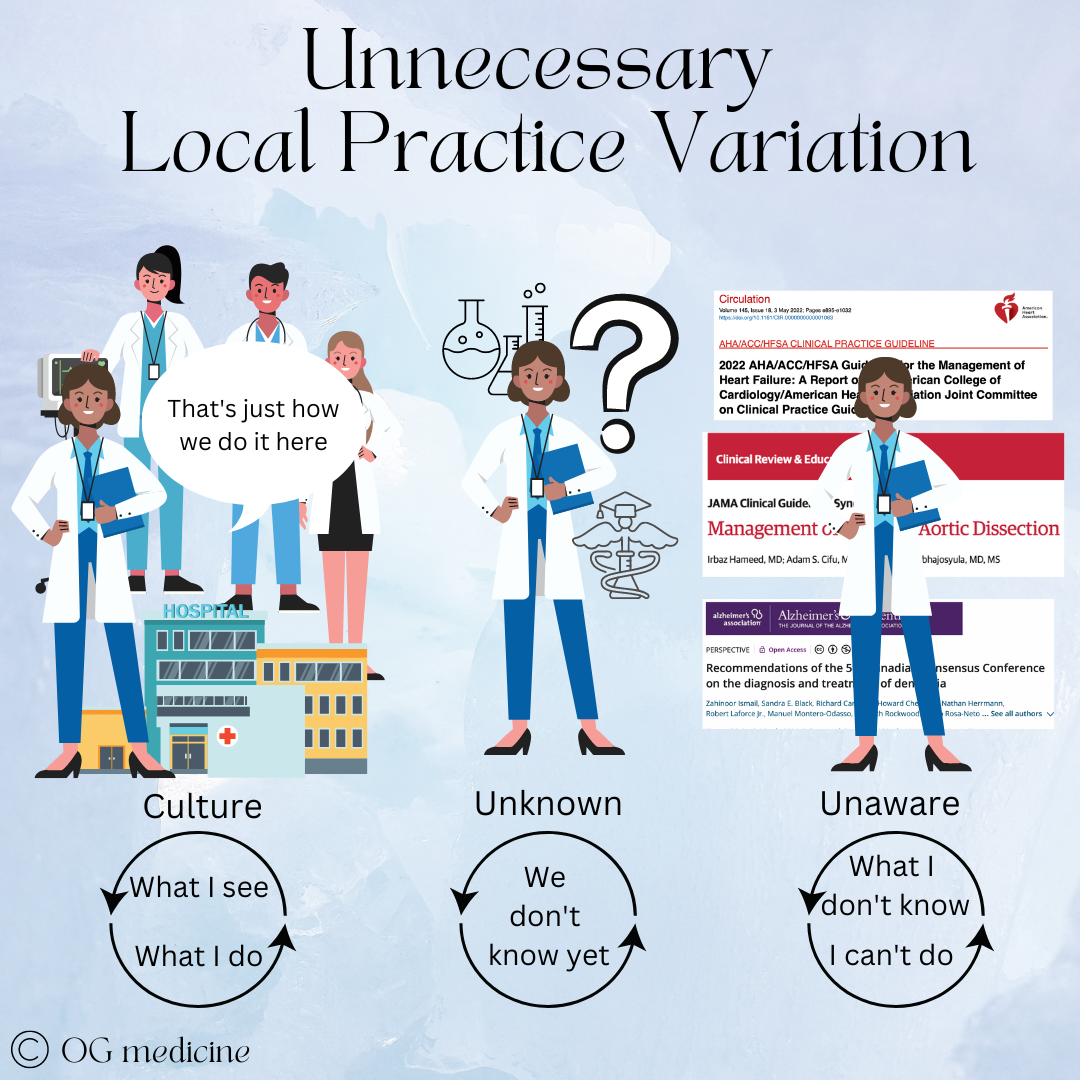

2. Unnecessary practice variation

On the other hand, unnecessary practice variation presents an opportunity for decision-MD AI to eventually out-do the doctors. As I’ve said, physicians are initially going to be a bottleneck to the adoption of decision-MD AI in healthcare. It’s just a fact. One of the biggest things they will look at is: did the AI suggest what I would have done? If the AI makes suggestions that don’t fit local practice patterns, whether the variation is necessary or not, then doctors are not going to trust its decision-making unless it can explain itself.

First, let’s understand where unnecessary variation comes from. It’s not completely understood, but I think it can be simplified into a) cultural patterns and b) a lack or ignorance of clinical guidelines.

When medical practice is different in different places not due to patient factors or preferences, it’s due to cultural norms (“what we do here”), lack of guidelines (still researching), or ignorance of existing guidelines that could inform best practice.

For example, during my internal medicine training I did various elective rotations in geriatric medicine at hospitals around the country. I noticed a really interesting pattern in the in-hospital prescribing of a sleep medication, Trazodone.

In Hamilton, where I mostly trained, Trazodone was not used very often. We used melatonin (pretty harmless) and just didn’t really give other drugs for sleep. In Ottawa, I saw very low-dose Trazodone (and therefore likely also not too harmful) used all the time. This was local practice variation, but the patients and their preferences were no different.

The simple reason for this is that physicians practice based on what they’ve learned in training, combined with brief reading of new guidelines and, mainly, interactions (i.e. experiences) with other physicians, their patients, and pharmaceutical reps (Gabbay and le May, 2004). In this case, there were no clear guidelines on what to use for sleep in hospitalized patients, and based on what everyone else was doing, Trazodone use had become normalized in Ottawa, while melatonin was the preferred drug in Hamilton.

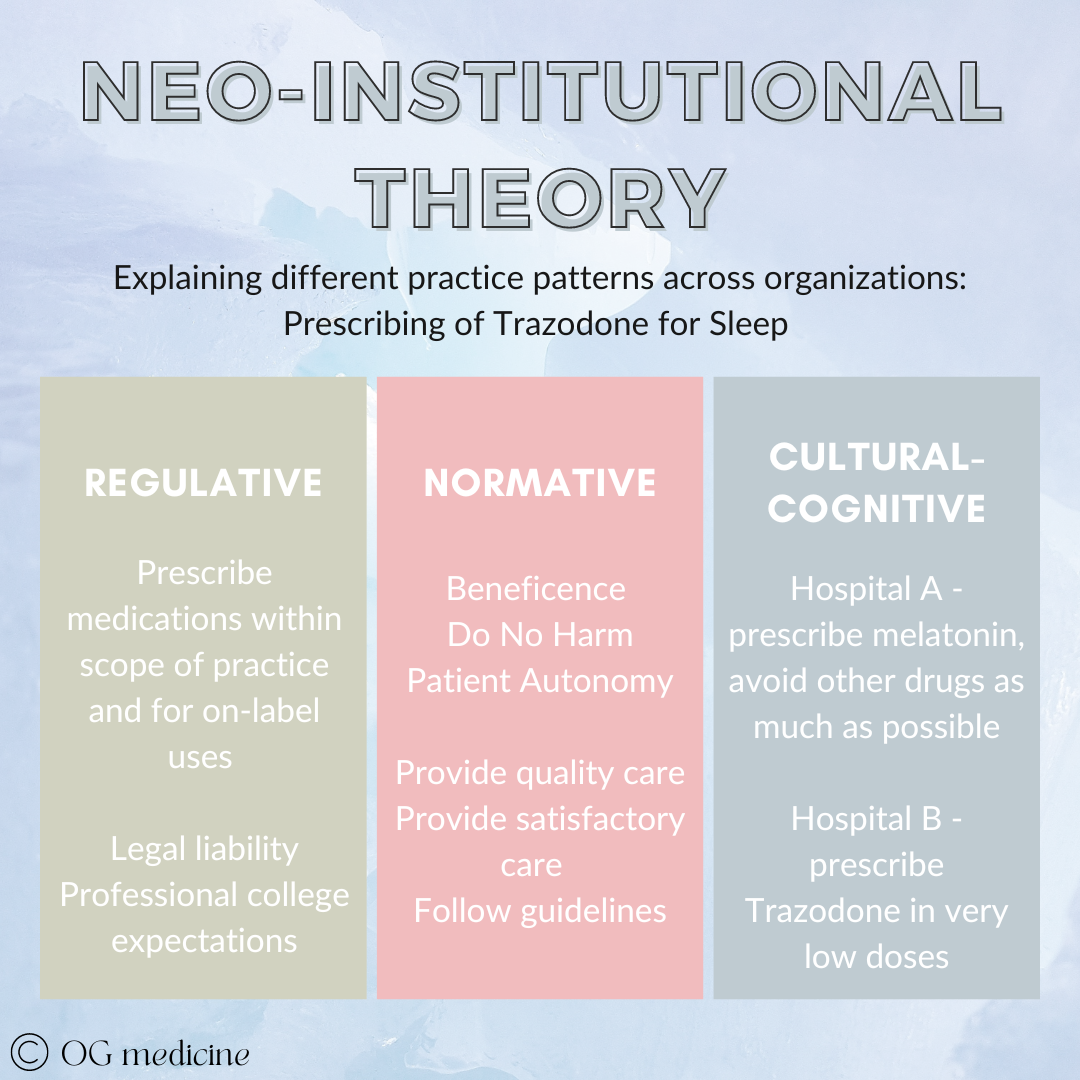

The more complex understanding of this can be mapped onto Neo-Institutional Theory of organizational behaviour. Stay with me here. The theory goes that there are 3 things that drive our behaviour in an organization (i.e. any local group). 1) What we must do - the rules and regulations; 2) What we think we should do - the professional standards and norms; and 3) What we actually do - what has become part of our unquestioned culture.

The case of Trazodone would look something like this:

Local practice patterns develop for a number of reasons. While most physicians are grounded in the same regulative and normative standards, local culture may differ, resulting in different actions.

Why do you get different cultural prescribing if regulative and normative values are the same? Drawing on social network theory, mindlines, communication studies, complex adaptive systems (my favourite!), and marketing, I think cultural behaviours can be explained by 1) opinion leaders and 2) prevalence.

Essentially, if peer opinion leaders (people that are similar in rank and experience) and expert opinion leaders (people who others look to for the final word) both start doing the same thing, that thing spreads amongst the network of physicians to reach a prevalence that becomes the visible cultural norm. Ta-da.

So, in Hamilton, we had different opinion leaders (McMaster University geriatricians) doing different things than the ones in Ottawa (University of Ottawa geriatricians), which led to different prescribing behaviours being propagated and reaching prevalence in the network. We had the same regulative and normative values, but at the end of the day, the physicians were different, and therefore the treatment was different.
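The opinion-leader-plus-prevalence idea can be made concrete with a linear-threshold diffusion model, a standard model from network science that I’m swapping in here purely for illustration; it is not taken from the sources above. In this minimal sketch, each physician adopts a practice once the weighted share of their contacts already using it reaches a threshold, and expert opinion leaders are given extra weight. The five-person network, the 0.6 threshold, and the weight of 3 for the expert are all hypothetical.

```python
def spread(contacts, seeds, threshold=0.6, leader_weight=None, max_rounds=50):
    """Linear-threshold diffusion of a practice through a physician network.

    contacts: dict mapping each physician to the colleagues they interact with.
    seeds: physicians who start using the practice (the opinion leaders).
    leader_weight: optional extra weight for expert opinion leaders (default 1).
    Returns the set of physicians using the practice once spreading stops.
    """
    leader_weight = leader_weight or {}
    adopted = set(seeds)
    for _ in range(max_rounds):
        newly = set()
        for doc, peers in contacts.items():
            if doc in adopted or not peers:
                continue
            total = sum(leader_weight.get(p, 1.0) for p in peers)
            using = sum(leader_weight.get(p, 1.0) for p in peers if p in adopted)
            if using / total >= threshold:
                newly.add(doc)
        if not newly:  # nobody else crossed the threshold this round
            break
        adopted |= newly
    return adopted


# Hypothetical five-person network with one expert opinion leader.
contacts = {
    "expert": ["p1", "p2"],
    "p1": ["expert", "p2"],
    "p2": ["expert", "p1", "p3"],
    "p3": ["p2", "p4"],
    "p4": ["p3", "expert"],
}

peer_only = spread(contacts, {"p1"})
peer_and_expert = spread(contacts, {"expert", "p1"}, leader_weight={"expert": 3.0})
print(len(peer_only) / len(contacts), len(peer_and_expert) / len(contacts))  # → 0.2 1.0
```

In this toy network, a lone peer adopter stalls at 20% prevalence, while the same peer plus a heavily weighted expert carries the practice to everyone, mirroring the “both start doing the same thing” condition. Real networks and thresholds would have to be measured, not assumed.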

While no harm was caused to patients in this example, this isn’t always the case. Sometimes there are clear guidelines that people don’t know about or agree with (otherwise they would follow them, as part of the normative values of physicians) and doing things differently actually causes either more harm to patients or unnecessary resource drain on the system.

Decision-MD AI has the potential to correct this unnecessary practice variation by having an explicit guideline-based scaffold in the background.

So, solution #3 is: when the decision-MD AI suggests a treatment that differs from local practice, not because of patient factors or preferences but because of unnecessary variation, it will explain why it has made this suggestion.

It could be as simple as ‘did you know about the latest guideline?’ or ‘there’s no difference in efficacy between these drugs, and this one is cheaper’. The physician can then choose to override it (again, we need AI to be accepted at first!), but as trust is built and AI becomes normalized, in the same way that Trazodone prescribing was, the AI will start to become the final word.
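The explanation step can be sketched as a lookup against the explicit, guideline-based scaffold. This is a minimal sketch, assuming a hypothetical `Option` record per treatment (an efficacy tier, a daily cost, and an optional guideline citation); the drug names and numbers are placeholders, not clinical data, and the function only explains: the override decision stays with the physician.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Option:
    """Hypothetical explicit-knowledge entry for one treatment option."""
    name: str
    efficacy_tier: int         # same tier = no meaningful efficacy difference
    cost_per_day: float
    guideline: Optional[str]   # guideline recommending this option, if any


def explain_suggestion(suggested: str, usual: str, scaffold: dict) -> List[str]:
    """Explain, from the explicit scaffold only, why the AI's suggestion differs
    from the physician's usual choice. The physician remains free to override."""
    s, u = scaffold[suggested], scaffold[usual]
    reasons = []
    if s.guideline and not u.guideline:
        reasons.append(f"Did you know about the latest guideline ({s.guideline})?")
    if s.efficacy_tier == u.efficacy_tier and s.cost_per_day < u.cost_per_day:
        reasons.append(
            f"There's no difference in efficacy between {s.name} and {u.name}, "
            f"and {s.name} is cheaper."
        )
    return reasons


# Placeholder drugs and numbers, not clinical data.
scaffold = {
    "drug_a": Option("drug_a", efficacy_tier=1, cost_per_day=0.50, guideline=None),
    "drug_b": Option("drug_b", efficacy_tier=1, cost_per_day=2.00, guideline=None),
}
for reason in explain_suggestion("drug_a", "drug_b", scaffold):
    print(reason)
```

An empty list means the difference is not explained by the explicit scaffold, which is itself a useful signal that the variation may be necessary rather than unnecessary.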

Finally, how on earth will the AI know whether the variation in the tacit knowledge it’s collecting is necessary or unnecessary? This is where the explicit symbolic AI system comes in again. As part of the decision-MD AI’s training, whenever a physician overrides one of its suggestions, it will ask why. Reasons could include patient preference and patient factors (necessary), or personal practice, no guidelines, and local practice (unnecessary). This can then be integrated into the algorithm going forward.
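The override loop just described amounts to labelling each disagreement and routing it. Here is a minimal sketch, assuming override reasons arrive as short labels matching the ones listed above; the queue names, the `route_override` function, and the “unclassified” fallback for anything else are hypothetical additions, not part of the proposal as stated.

```python
# Override reasons named in the text, grouped by the kind of variation they signal.
NECESSARY = {"patient preference", "patient factors"}
UNNECESSARY = {"personal practice", "no guidelines", "local practice"}


def classify_override(reason: str) -> str:
    """Map a physician's stated override reason to a variation type."""
    reason = reason.strip().lower()
    if reason in NECESSARY:
        return "necessary"
    if reason in UNNECESSARY:
        return "unnecessary"
    return "unclassified"  # free-text reasons would need human review


def route_override(case_id, reason, local_context, explain_queue, review_queue):
    """Hypothetical routing: necessary variation is kept as local context for
    training; unnecessary variation is queued for a guideline-based explanation."""
    kind = classify_override(reason)
    if kind == "necessary":
        local_context.append(case_id)
    elif kind == "unnecessary":
        explain_queue.append(case_id)
    else:
        review_queue.append(case_id)
    return kind


local_context, explain_queue, review_queue = [], [], []
for case_id, reason in [(1, "Patient preference"), (2, "local practice"), (3, "gut feel")]:
    route_override(case_id, reason, local_context, explain_queue, review_queue)
print(local_context, explain_queue, review_queue)  # → [1] [2] [3]
```

Keeping necessary overrides as training context, rather than discarding them as errors, is what lets the AI learn legitimate local variation while still flagging the unnecessary kind.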

Eventually, both physicians and the AI will learn, and the AI can start to make definitive decisions, enabling it to be covered under its own medical license, just like a human doctor.

5) DECISION-MD AI SHOULD BE ABLE TO OBTAIN AN INDEPENDENT LICENSE AND MALPRACTICE INSURANCE LIKE A HUMAN DOCTOR

One of the greatest challenges to AI will be in its regulation and legal liability. Even if we develop a useful AI (points 2-4), we won’t be able to save our healthcare system if it does not pass a country’s regulatory requirements, or if doctors, patients, or the healthcare system won’t use it for fear of litigation.

Given point #1, in which I argued AI is the only thing that will save the healthcare system within our lifetime, there is an impetus to find new ways of regulating and legalizing AI in a feasible manner that balances safety with reality.

A) Medicolegal Liability

If the AI makes a mistake, who is responsible? The company that owns the AI? The doctor who used the AI in their practice to augment their decision making (such as in clinical decision support tools)? Or the patient who chose to interact with the product?

I believe the solution is to legally view the AI differently depending on what stage of development it is in, just as we treat human doctors differently at various stages of medical training. For example:

Stage 1 - medical student - this is when the AI is trained on explicit knowledge, and does absolutely nothing on its own. Most ideas that it will come up with are “right” on paper, but “wrong” in practice. It’s missing the context and tacit knowledge. All current “decision-MD-AI-wanna-be’s” in the world are at this stage, because they are only using explicit knowledge (as explained above). This AI is the responsibility of its maker.

Stage 2 - resident - this is when the AI is being trained on tacit knowledge, and it is supervised in its experience while also being given more responsibility so that it can learn on its own. This would look like a clinical decision support tool, where eager early-adopter physicians agree to work with the AI to check its clinical gestalt as it watches and learns from more and more tacit knowledge.

At this stage, the AI will need some type of legal cover so that the physicians working with it are protected. In real life, residents pay for their own medicolegal coverage through the CMPA (Canadian Medical Protective Association), so that if anything goes wrong while they are being supervised by an attending physician, both the resident and the physician have legal representation.

Stage 3 - independent practice - after receiving commendations from a to-be-determined number of physicians and writing the usual Royal College licensing exam (which actually tests only explicit knowledge, and so could be done at stage 1, but would not translate into useful clinical decision making without the tacit stage), the AI will be given its own independent medical license and malpractice insurance. It will be required to complete continuing medical education (CME) on explicit knowledge, just as physicians are, and it will be judged by a jury of its peers should it make serious mistakes.

In essence, the AI will be treated like a real doctor, because that is precisely what it has been trained to be, just like its human counterparts.
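The staged model above behaves like a small state machine, with liability attached to each state. This minimal sketch encodes it under stated assumptions: the move from stage 1 to stage 2 is assumed to happen once tacit training begins (the text sets no gate for it), and `REQUIRED_COMMENDATIONS = 100` is a hypothetical placeholder for the to-be-determined number of physician commendations.

```python
from enum import Enum


class Stage(Enum):
    MEDICAL_STUDENT = 1  # explicit knowledge only; acts on nothing by itself
    RESIDENT = 2         # supervised training on tacit knowledge
    INDEPENDENT = 3      # own license, malpractice insurance, and CME


# Who carries legal responsibility at each stage, as proposed above.
LIABILITY = {
    Stage.MEDICAL_STUDENT: "the AI's maker",
    Stage.RESIDENT: "the AI's own coverage, alongside the supervising physician's",
    Stage.INDEPENDENT: "the AI's own license and malpractice insurance",
}

# Hypothetical placeholder: the text leaves this number to be determined.
REQUIRED_COMMENDATIONS = 100


def promote(stage: Stage, commendations: int = 0, passed_exam: bool = False) -> Stage:
    """Return the stage the AI is in after a promotion review."""
    if stage is Stage.MEDICAL_STUDENT:
        # Assumed: moving to supervised tacit-knowledge training has no gate.
        return Stage.RESIDENT
    if stage is Stage.RESIDENT and passed_exam and commendations >= REQUIRED_COMMENDATIONS:
        return Stage.INDEPENDENT
    return stage


stage = promote(Stage.MEDICAL_STUDENT)
stage = promote(stage, commendations=40, passed_exam=True)   # still a resident
stage = promote(stage, commendations=120, passed_exam=True)  # now independent
print(stage.name, "->", LIABILITY[stage])
```

Passing the exam alone never promotes the AI, which reflects the point that explicit knowledge without the tacit stage is not enough for independent practice.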

Inevitable Legal Action

We must also be prepared for the fact that the AI will mess up and will get sued, just as all human physicians are told as medical students that they will make mistakes one day and get sued. Seriously. It happens to every doctor because we’re only human, and ultimately the AI will be trained on human knowledge.

We must accept and expect imperfection. In stage 1, the AI will mess up all the time. In stage 2, it will mess up a lot of the time, and in stage 3, it will still mess up sometimes.

That being said, as the AI “practices medicine” it will rapidly become more proficient, because it will be able to see thousands of patients in the same moment and learn from its mistakes, while a human doctor sees a thousand patients over a span of several months. As long as the AI has access to feedback (i.e. the patient returns to tell it what is happening, just like a follow-up appointment with a physician), it will improve itself over time. Theoretically, the AI could reach a point of perfection, because unlike us, it’s more than human.

B) Regulatory Approval

Before a healthcare product can be used in a country, it must pass regulatory requirements. Specifically, as Software as a Medical Device (SaMD), decision-MD AI will need to be approved by Health Canada in Canada, the FDA in the USA, the MHRA in the UK, the EMA in the EU, and so on.

Two crucial challenges to this process are:

Decision-MD AI will constantly evolve and learn, just as a doctor constantly learns as new research and wisdom become available, unlike other SaMDs, which are approved based on a finite, “static” state.

Decision-MD AI will not be able to fully explain how it arrived at a diagnosis, because it is tacit-based (which is more than words alone), despite regulators calling for explainability.

Regulators are going to have to accept these issues if we want to save our healthcare system from collapse. Here’s why:

1) Evolving product

Traditionally, SaMD is approved based on a static state, not a dynamic state like that of continuously self-learning algorithms. Just as medications require amendments to their regulatory approval for different indications, decision-MD AI could in theory require regulatory amendments when it “meaningfully” changes.

On the surface this might seem like a reasonable request, but it is not feasible in reality. Regulatory approval takes months, and an AI that is providing healthcare to thousands of patients cannot be put on hold while regulators (who often don’t have medical expertise) look things over.

Simply put, once a decision-MD AI is approved, it should remain approved. It will change and adapt as a natural consequence of continuous learning, just like a doctor does. If there are signals of harm or actual harm, then it can be ordered to undergo additional training, or sued and judged by a jury of its peers, like human physicians. However, until its license is revoked, regulatory approval should not be removed.

Again, decision-MD AI is the only way our system can avoid collapse. We have to make some tradeoffs here, which includes taking some calculated and well-intentioned risks. Yes, we’ll need to figure out an “early warning” system to flag if the AI is causing harm, and there will need to be agreement across legal and governmental institutions. Yes, I’m describing a solution that will need a whole societal effort to achieve.
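As one illustration of what such an early warning system could look like, the sketch below uses a one-sided CUSUM (cumulative sum) chart, a long-established statistical process control technique that has been applied to monitoring clinical outcomes; this is my swapped-in example, not a method the proposal specifies. It assumes each of the AI’s cases is later labelled 1 if harm occurred and 0 otherwise, and the baseline rate, allowance, and alarm threshold are hypothetical values that would need careful calibration.

```python
from typing import Iterable, Optional


def cusum_alarm(outcomes: Iterable[int], baseline_rate: float,
                allowance: float, threshold: float) -> Optional[int]:
    """One-sided tabular CUSUM over binary outcomes (1 = harm, 0 = no harm).

    Each case adds (outcome - baseline_rate - allowance) to a running total that
    is floored at zero, so isolated harms fade while a sustained excess builds up.
    Returns the index of the case that triggers the alarm, or None.
    """
    s = 0.0
    for i, harmed in enumerate(outcomes):
        s = max(0.0, s + (harmed - baseline_rate - allowance))
        if s > threshold:
            return i
    return None


# Hypothetical settings: expected harm rate 5%, allowance 5%, alarm above 2.0.
print(cusum_alarm([0] * 50, 0.05, 0.05, 2.0))      # → None
print(cusum_alarm([1, 1, 1, 1], 0.05, 0.05, 2.0))  # → 2
```

An alarm would be a prompt for the kind of review described above, such as additional training, not a verdict on its own.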

If health is truly a fundamental right, then we need to pull together to make decision-MD AI a legal and regulatory possibility.

2) Explainability

Lastly, decision-MD AI should only be expected to explain itself to the same degree as a human doctor. What people outside of medicine don’t realize is that doctors often can’t completely tell you why they did the thing that they did. We can tell you when it’s clear-cut; we fixed the leg because it was broken. But we can’t tell you when it’s “fuzzy”; we gave lasix at the same time as the pRBC because the patient is a bit on the older side and tends towards heart failure and there was just a gut feel to it. Gut feel = tacit, and tacit = more than words. We can approximate our thinking, but we can never fundamentally explain it using words alone.

“We know more than we can tell”

This inexplicability phenomenon can be demonstrated by the real-life example of two groups of medical students; one more explicit-trained, and one more tacit-trained.

Medical students from the University of Toronto are highly trained on explicit knowledge (lots of lectures, lots of memorization, lots of exams). They are phenomenal at answering questions like “give me 10 diseases in the differential for retrosternal chest pain”.

On the other hand, if you ask a McMaster medical student the same question, they might only know one or two diseases. Instead of reams of lectures, they were taught tacit knowledge via case-based learning in small groups (as far as I’m aware, current medical institutions don’t realize that tacit knowledge transfer is the fundamental mechanism that they’re using).

Yet, if you put a patient with retrosternal chest pain in front of these two medical students, the McMaster student will know how to medically manage the patient while the Toronto student will struggle to figure out what to do. This is the explicit-tacit dilemma in action, where the McMaster student has learned how to be a doctor without necessarily learning all the what. Most importantly for this argument, however, when you ask the McMaster student how they know what to do, they won’t really be able to tell you. This is the mark of tacit knowledge. It cannot easily be put into words. It is knowing beyond description.

This is precisely the knowing that AI will need to achieve in order to be clinically useful, and therefore it will need to be given regulatory approval despite not being able to fully explain how it came to its answer. Regulators need to be prepared for this eventuality. Useful decision-MD AI and explainability are antithetical to one another.

That being said, the explicit portion of the AI will be explainable, and in particular, the fuzzy logic system that I proposed earlier involves an explicit algorithm that can be inspected by regulators. The AI should be able to give an outline of its thinking (as the doctor above did when prescribing lasix), but the exact linkages between concepts will remain tacit.

In Closing

I hope you enjoyed this post on AI in healthcare. As with all ideas in the world that have come before and that will come after, this is a work in progress.

Here’s a snapshot of the key points by OG Medicine in 2023.

We need AI if our healthcare system is to avoid collapse

Training AI off tacit knowledge is the key we’ve been missing

Using the best of symbolic and cybernetic AI will get us closer to true whole-MD AI

Global tacit knowledge can help prevent bias in the AI, but most of the knowledge will have to be trained locally (each city, hospital, clinic, etc).

We need to give AI its own medical license

Thanks for reading, and I hope you take this information to build the future AI that healthcare needs.

Olivia

Dr. Geen is an internist and geriatrician in Canada, working in a tertiary hospital serving over one million people. She also holds a master’s in Translational Health Sciences from the University of Oxford, has published in over 10 academic journals, and advises digital healthcare startups on problem-solution fit and implementation. For more info, see About.

Influences:

1) Life experiences and deep thinking.

2) Random books that triggered random ideas that slowly percolated over time.

3) Boden M. Artificial Intelligence: A Very Short Introduction. Oxford University Press, 2018.

4) Conversations at Kellogg College, Oxford, with various postgraduate students in software engineering, machine learning, and translational health sciences.

5) Gabbay J, le May A. Evidence based guidelines or collectively constructed "mindlines?" Ethnographic study of knowledge management in primary care. BMJ, 2004;329(7473):1013.

6) Wieringa S, Greenhalgh T. 10 years of mindlines: a systematic review and commentary. Implementation Science, 2015;10:45.